VSCode devcontainers and the Python AzureML SDK

Microsoft is making great strides in winning our hearts and engaging with the open source ecosystem, the same ecosystem it openly worked against in the 2000s. I confess I am in the process of being assimilated into the Microsoft world, and I feel good about it. In this blog post I describe a development workflow for launching ML models in the cloud (AzureML) while developing code in VSCode, wherever you are. Along the way I will explain why I like what is happening.

1 VSCode ML devcontainer

I was fairly late to VSCode, and after my usual period of denial it took me by surprise. The remote development functionality introduced in Q2 2019 (vscode-dev-blog) really sealed the deal for me. It comes in three flavors:

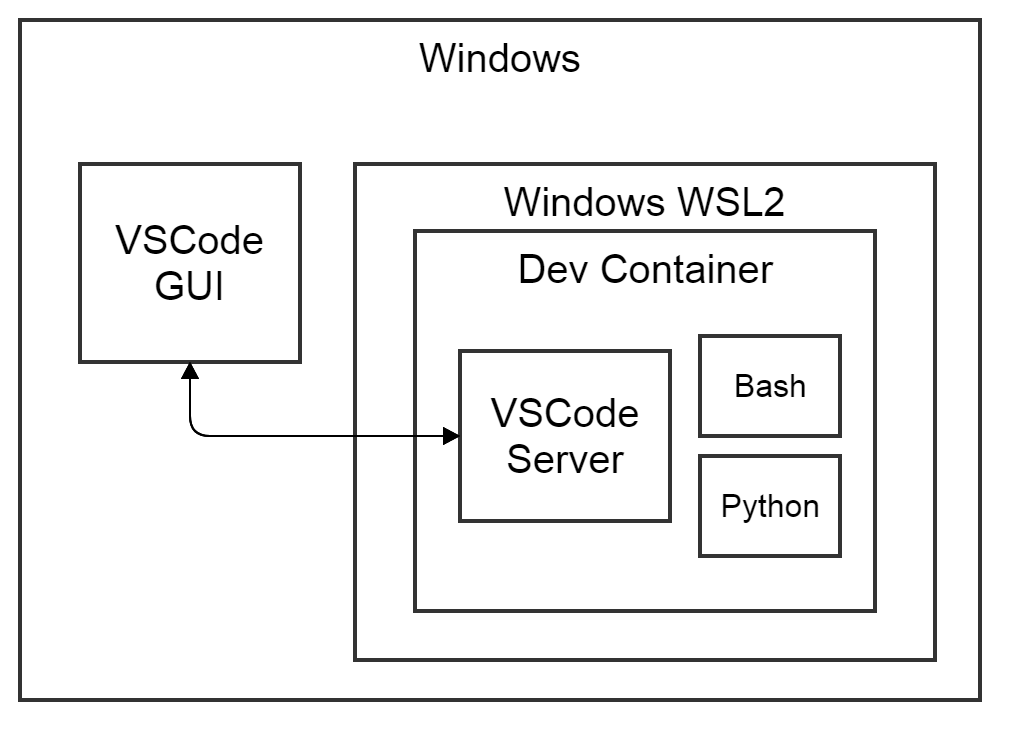

- Deploy the VSCode server within the Windows Subsystem for Linux (WSL) and run the client on the Windows desktop

- Deploy the VSCode server within a Docker container and run the client on the Windows desktop

- Deploy the VSCode server to an SSH target and run the client locally

  - Optionally, run the server inside a Docker container on the SSH target (remote-ception)

This is rich functionality, and it does not disappoint in reliability. Let us take a closer look.

1.1 VSCode and WSL2

The Windows Subsystem for Linux (WSL) has been around for years. WSL2 runs Linux-based Docker containers and WSL Linux distributions on the same architecture (docker-wsl2-backend). Importantly, it replaced the heavyweight Hyper-V virtual machine used by the older Docker backend with a lightweight utility VM running a real Linux kernel, so WSL Linux runs pretty much natively. VSCode can edit code on the Windows filesystem while running its server in WSL, integrating seamlessly with Python interpreters and shells.

In this post we suggest running the VSCode server inside a Docker container on the WSL2 backend, with the GUI running on the Windows desktop. WSL2 is the future and has benefits such as Windows Home support (docker-windows-home), better resource management and the prospect of GPU and GUI support (WSL2-dev-blog). What we discuss also works on the legacy Hyper-V-based Docker backend.

1.2 VSCode inside an AzureML devcontainer

Microsoft provides well-maintained Dockerfiles containing common development tools for language-specific and language-agnostic scenarios (vscode-dev-containers). We selected the azure-machine-learning-python-3 container (azureml-dev-container) because it ships a Conda Python interpreter with the azureml-sdk package installed. The image derives from Ubuntu. If we include the `./.devcontainer` folder in any of our projects, VSCode will:

- detect the presence of `./.devcontainer` and its contents

- build a container from `./.devcontainer/Dockerfile`

- install the VSCode server and optional extensions

- connect to the container, configured by `./.devcontainer/devcontainer.json`

The settings for VSCode container integration are stored in `./.devcontainer/devcontainer.json` and allow any Dockerfile to be integrated without alteration.
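As a rough illustration of what such a settings file contains, the Python sketch below writes a minimal `devcontainer.json` that points VSCode at the Dockerfile next to it. The keys follow the current Dev Container specification (`build.dockerfile`, `customizations.vscode.extensions`); older VSCode releases used top-level `dockerFile` and `extensions` keys instead, and the file shipped with azure-machine-learning-python-3 may contain more settings. The helper name `write_devcontainer_json` and the extension list are assumptions for illustration.

```python
import json
from pathlib import Path


def write_devcontainer_json(folder, name="Azure Machine Learning",
                            extensions=("ms-python.python",)):
    """Write a minimal devcontainer.json into `folder` and return its path.

    Hypothetical helper; keys follow the current Dev Container spec and the
    real azure-machine-learning-python-3 definition may differ.
    """
    config = {
        # Shown in the remote indicator, e.g. "Dev Container: Azure Machine Learning"
        "name": name,
        # Build the image from the Dockerfile that sits next to this file
        "build": {"dockerfile": "Dockerfile"},
        # Extensions installed into the VSCode server inside the container
        "customizations": {"vscode": {"extensions": list(extensions)}},
    }
    path = Path(folder) / "devcontainer.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2))
    return path
```

Calling `write_devcontainer_json(".devcontainer")` from a project root would create the folder and file; in practice you would more likely copy or link the maintained definition, as the setup steps below do.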

1.2.1 Customizable

We can add our favorite development tools to these devcontainers as additional layers in the Dockerfile, or build on top of a base image with a `FROM` instruction in our custom Dockerfile. For instance, we can add the Azure CLI with extensions and Terraform using `RUN` instructions. An image with your favorite development environment is a good companion to have. Optionally, we can manage our custom image in one place and link to it from each of our projects.
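A small Python sketch of this layering idea: it renders a Dockerfile that starts `FROM` a shared base image and appends one `RUN` instruction per tool. The base image name and the helper `render_dockerfile` are hypothetical, and only the Azure CLI installer (Microsoft's documented one-liner for Debian/Ubuntu) is shown; Terraform or CLI extensions would be further entries in the list.

```python
def render_dockerfile(base_image, run_commands):
    """Return Dockerfile text that layers extra tools onto `base_image`.

    Each command becomes its own RUN instruction, i.e. its own image layer,
    so changing a later command only rebuilds the layers from that point on.
    """
    lines = [f"FROM {base_image}"]
    lines += [f"RUN {cmd}" for cmd in run_commands]
    return "\n".join(lines) + "\n"


# Hypothetical base image name; replace it with the image you maintain.
BASE_IMAGE = "myregistry.azurecr.io/azureml-devcontainer:latest"

# Azure CLI installer for Debian/Ubuntu; assumes the build runs as root
# and that curl is available in the base image.
TOOLS = ["curl -sL https://aka.ms/InstallAzureCLIDeb | bash"]
```

Writing `render_dockerfile(BASE_IMAGE, TOOLS)` to `./.devcontainer/Dockerfile` would give each project the same toolchain on top of one centrally managed image.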

1.2.2 Set up a VSCode Python AzureML project

- Install Docker Desktop on Windows

- Install Git for Windows

- Install VSCode and its “Remote – Containers” extension

- Change directory to a suitable dev directory on the Windows filesystem (here `c:\dev`)

- Clone the repository: `git clone https://github.com/microsoft/vscode-dev-containers`

- Within an existing Python project, link the `.devcontainer` folder with a directory junction in PowerShell:

  - `New-Item -ItemType Junction -Path "c:\dev\path\to\your\project\.devcontainer\" -Target "c:\dev\vscode-dev-containers\containers\azure-machine-learning-python-3\.devcontainer\"`

- Within the project base folder, launch VSCode with `code .`

- VSCode detects the `.devcontainer` folder and asks whether to reopen the folder in the container: choose yes

- VSCode builds the container, installs its server and reopens the folder

- Confirm your environment in the lower-left corner: a green indicator reads “Dev Container: Azure Machine Learning”
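Besides the green indicator, a quick sanity check from a terminal inside the container can confirm where the interpreter runs. The sketch below is heuristic: `/.dockerenv` is a marker file Docker usually creates, WSL2 kernels (which Docker Desktop's WSL2 backend also uses) normally contain "microsoft" in their release string, and `azureml-core` is the core package the azureml-sdk metapackage installs. It assumes Python 3.8+ for `importlib.metadata`; the function name is hypothetical.

```python
import platform
from importlib import metadata  # Python 3.8+
from pathlib import Path


def describe_environment():
    """Heuristic report on where this interpreter runs (hypothetical helper)."""
    try:
        # azureml-core is pulled in by the azureml-sdk metapackage
        sdk_version = metadata.version("azureml-core")
    except metadata.PackageNotFoundError:
        sdk_version = None
    return {
        # Docker usually creates /.dockerenv in containers; not a guarantee
        "in_container": Path("/.dockerenv").exists(),
        # WSL2 kernel release strings normally contain "microsoft"
        "wsl_kernel": "microsoft" in platform.release().lower(),
        "python": platform.python_version(),
        "azureml_core": sdk_version,
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Inside the devcontainer on the WSL2 backend you would expect `in_container` and `wsl_kernel` to be true and `azureml_core` to show a version string.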

1.3 VSCode devcontainer summary

We can develop using fully reproducible Linux tool chains and still work on a Windows laptop. This is probably not convincing to a Linux or macOS user, but once you are comfortable with these tools you have them available almost anywhere. This is valuable if you work for companies that provide you with the infrastructure and a laptop. Another scenario is training deep learning models on the GPU of your Windows gaming desktop, as GPU support for WSL2 has been announced (WSL2-dev-blog).

2 The AzureML Python SDK is fancy

A prerequisite for this section is an active Azure subscription. You can get a free Azure account with starter credits, although signing up requires a credit card.

VSCode is now running within a devcontainer featuring Python and the AzureML Python SDK. The SDK contains an elaborate API spanning the entire lifecycle of an ML model, from exploration in notebooks, through training and tracking, to artifact management and deployment. This includes provisioning and configuring the compute for model training and the deployment targets for model scoring. Phew. Some of the tools I reference, such as the Azure CLI, I added in my own branches (my-fork).

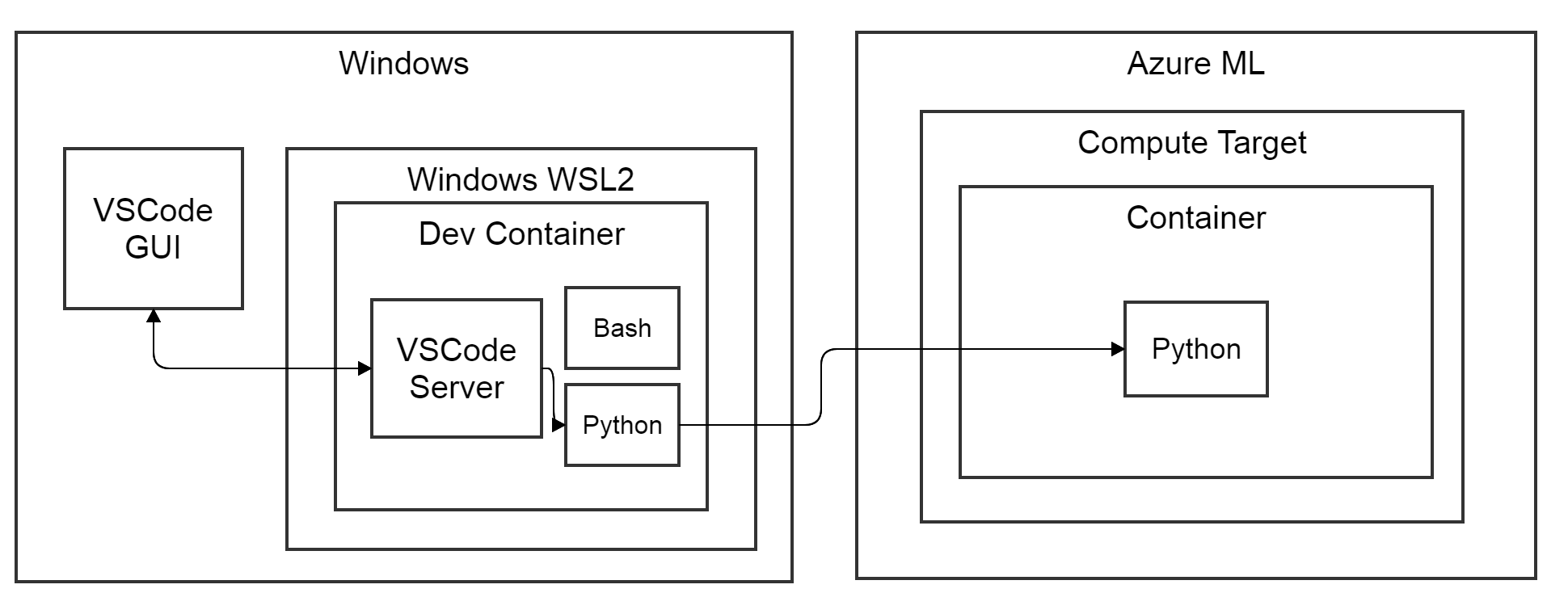

The diagram above shows what we are aiming at. We launch an experiment from VSCode using the AzureML SDK inside our devcontainer, and through the SDK we execute code on the compute target. Depending on the details of the environment, the compute target runs its code within a Docker container as well. The specifics of the environment are something we will discuss in part 2 of this blog series.

2.1 Register your container with Azure

- Open a terminal in VSCode: menu Terminal -> New Terminal

- Run `az login` in the shell. Go to www.microsoft.com/devicelogin and enter the code shown in the console output

- We have now linked our environment with an Azure subscription

- The account information is stored in `~/.azure/azureProfile.json` within the container
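To see which subscription the login selected, the supported route is `az account show`. As a sketch of what the stored profile holds, the Python snippet below reads `azureProfile.json` and returns the default subscription. It assumes the file's current layout (a `subscriptions` list whose entries carry `name`, `id` and `isDefault`), which is an internal detail of the Azure CLI and may change; the function name is hypothetical.

```python
import json
from pathlib import Path

# Location used by the Azure CLI inside the container (see the step above)
PROFILE = Path.home() / ".azure" / "azureProfile.json"


def default_subscription(profile_path=PROFILE):
    """Return (name, id) of the default subscription, or None if there is none.

    Assumes the current azureProfile.json layout. The file may start with a
    UTF-8 byte-order mark, so it is decoded with "utf-8-sig", which also
    accepts files without one.
    """
    data = json.loads(Path(profile_path).read_text(encoding="utf-8-sig"))
    for sub in data.get("subscriptions", []):
        if sub.get("isDefault"):
            return sub["name"], sub["id"]
    return None
```

Because the profile lives inside the container, it is a quick way to check that the login survived a container restart.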

2.2 Show us the code!

Let’s look at Python AzureML SDK code to:

- Create an AzureML Workspace

- Create a compute cluster as a training target

- Run a Python script on the compute target

2.2.1 Creating an AzureML workspace

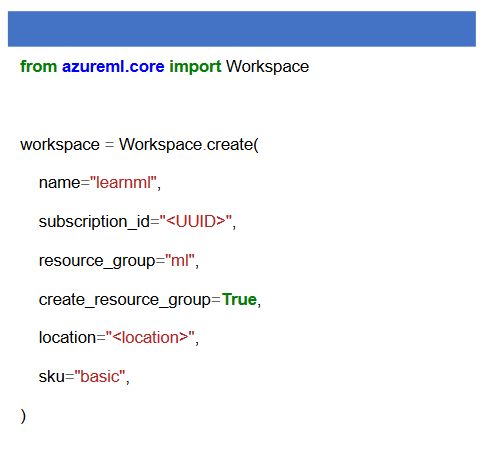

An AzureML workspace consists of a storage account, a key vault, Application Insights, a Docker image registry and the actual workspace, which has a rich UI on portal.azure.com. A newer, related Machine Learning studio is in open beta and looks really good (https://ml.azure.com). The following code creates the workspace and its required services and attaches them to a new resource group.
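For readers who cannot see the screenshot, here is a hedged sketch of the same step with the v1 AzureML Python SDK (`Workspace.create`, `write_config`, `AzureCliAuthentication`). The helper names and every argument value are hypothetical. The SDK import sits inside `create_workspace` so the module loads without azureml-sdk installed; actually calling it requires the SDK and the `az login` from section 2.1.

```python
def workspace_kwargs(name, subscription_id, resource_group, location):
    """Collect the Workspace.create arguments used in this sketch (hypothetical helper)."""
    return {
        "name": name,
        "subscription_id": subscription_id,
        "resource_group": resource_group,
        "create_resource_group": True,  # create the resource group if it is missing
        "location": location,
        "exist_ok": True,  # return an existing workspace instead of failing
    }


def create_workspace(**kwargs):
    """Create (or fetch) the workspace and save its config.json (azureml-sdk v1)."""
    from azureml.core import Workspace
    from azureml.core.authentication import AzureCliAuthentication

    # Reuse the credentials cached by `az login` inside the devcontainer
    ws = Workspace.create(auth=AzureCliAuthentication(), **kwargs)
    # By default writes .azureml/config.json, which Workspace.from_config() finds later
    ws.write_config()
    return ws
```

A call might look like `create_workspace(**workspace_kwargs("my-ws", "<subscription-id>", "my-rg", "westeurope"))`, where all values are placeholders. The written `config.json` is what the compute-cluster code in the next section relies on.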

2.2.2 Creating a compute cluster

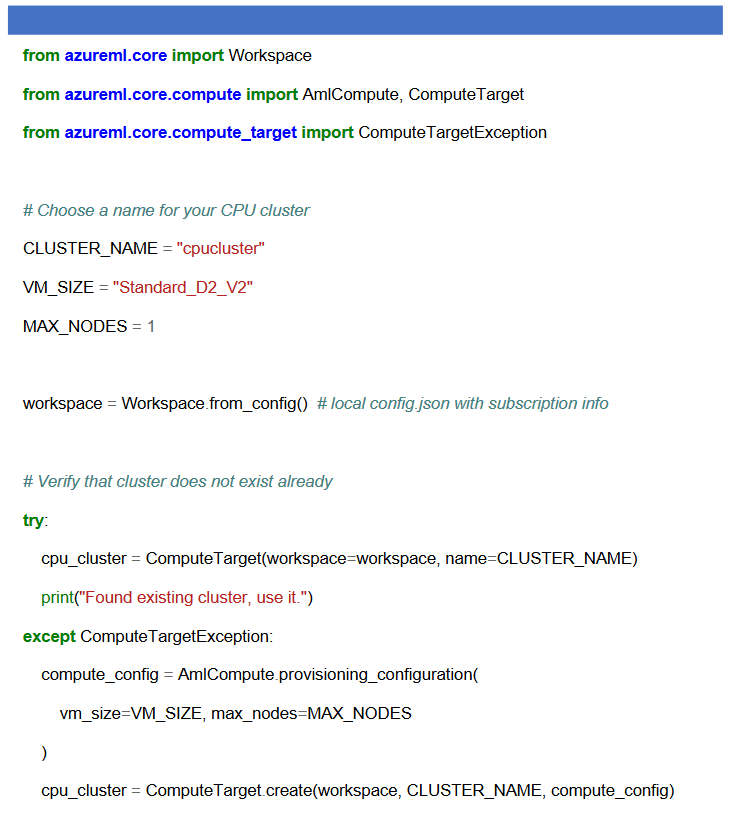

We need compute resources to train our ML model, and we can create and orchestrate compute clusters using the AzureML Python SDK. The workspace in which to create the resources is defined by a `config.json` file in the working directory (configure-workspace). We create a compute cluster of between 0 and MAX_NODES virtual machines (VMs) running Docker. `AmlCompute` stands for Azure Machine Learning Compute and is the configuration class for the cluster. We pass the provisioning configuration to the `create` method of the `ComputeTarget` class, which returns a `ComputeTarget` object. This object is used whenever we want to perform computations. As an exercise, you can wrap this code in a function so that getting the `compute_target` is reproducible, for instance by reusing an existing cluster of the same name.

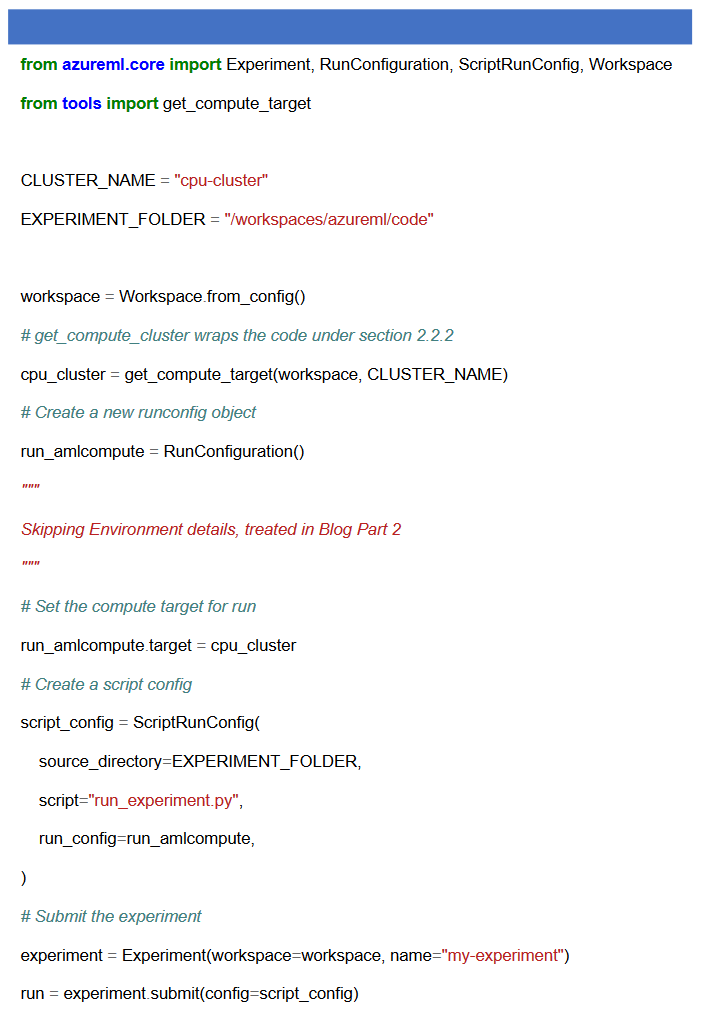
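One way to do the exercise above, as a sketch with the v1 SDK: look the cluster up by name and only provision it when the lookup raises `ComputeTargetException`. The generic `get_or_create` helper is hypothetical and kept free of Azure so it can be tested; the cluster name, VM size and node count are placeholder defaults, not recommendations.

```python
def get_or_create(lookup, create, not_found):
    """Return lookup() if it succeeds, otherwise create() (hypothetical helper)."""
    try:
        return lookup()
    except not_found:
        return create()


def get_compute_target(ws, name="cpu-cluster", vm_size="STANDARD_D2_V2", max_nodes=4):
    """Reuse the named AmlCompute cluster or provision it (azureml-sdk v1)."""
    from azureml.core.compute import AmlCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    def create():
        # min_nodes=0 lets the cluster scale down to zero VMs when idle
        config = AmlCompute.provisioning_configuration(
            vm_size=vm_size, min_nodes=0, max_nodes=max_nodes)
        target = ComputeTarget.create(ws, name, config)
        target.wait_for_completion(show_output=True)
        return target

    # ComputeTarget(workspace=..., name=...) raises ComputeTargetException if absent
    return get_or_create(lambda: ComputeTarget(workspace=ws, name=name),
                         create, ComputeTargetException)
```

With `ws = Workspace.from_config()`, repeated calls to `get_compute_target(ws)` return the same cluster instead of provisioning a new one each time.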

2.2.3 Run a Python script on the compute target

We want to run a Python script on our compute cluster. In part 2 of this series we will go into configuring the remote Environment, which involves Docker and Conda (amlcompute-docs). Here we skip over most of the details without breaking the code.
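A minimal sketch of the submission step, assuming a recent azureml-core 1.x where `ScriptRunConfig` accepts `compute_target` directly. No `environment` is passed, so the run is assumed to fall back to the SDK's default Docker image, in the spirit of skipping the details. The script `hello.py`, the experiment name and both helper names are hypothetical; `ws` and `compute_target` come from the previous steps.

```python
from pathlib import Path

# Hypothetical payload: prints the host name of the node it runs on
HELLO = 'import platform\nprint("Hello from", platform.node())\n'


def write_script(source_dir, script="hello.py"):
    """Write the tiny hypothetical script into source_dir and return its path."""
    path = Path(source_dir) / script
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(HELLO)
    return path


def submit(ws, compute_target, source_dir, script="hello.py",
           experiment_name="hello-experiment"):
    """Submit the script as a tracked run on the cluster (azureml-sdk v1)."""
    from azureml.core import Experiment, ScriptRunConfig

    # No environment given: assumed to use the SDK's default environment
    src = ScriptRunConfig(source_directory=str(source_dir), script=script,
                          compute_target=compute_target)
    run = Experiment(workspace=ws, name=experiment_name).submit(src)
    run.wait_for_completion(show_output=True)  # stream logs to the VSCode terminal
    return run
```

The whole `source_dir` is uploaded as a snapshot, so keeping it small speeds up submission; the run then appears under the experiment name in the ML studio.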

2.3 AzureML SDK summary

We have seen how easy it is to create an AzureML workspace, spin up a compute cluster and run a Python script on it as a tracked experiment within the ML studio. Part 2 of this blog series will discuss the AzureML Python run Environment (azureml-environment-docs) in detail. Follow-up posts will cover the path from training to deployment using the AzureML SDK.

3 Rounding up

Microsoft is all-in on machine learning and Python, and it shows. For our workflow we have added Docker/WSL2 into the mix. From a developer perspective it is important that all the pieces work together reliably so that actual work gets done. I am impressed with the quality of the various parts of the ecosystem (Docker/WSL2, VSCode and AzureML) and their integration into a workflow. Of course, there are some pitfalls along the way, and they are what we end part 1 of this series on.

3.1 Some pitfalls of Docker and WSL2 on Windows 10:

- File permission mapping between the Linux and Windows filesystems is odd (wsl-chmod-dev-blog)

- Bind mounts are owned by root:root

- Crontab users might have to resort to the Windows Task Scheduler (wsl-scheduling)

- GPU support is on the way but not there yet (2020)

- Linux GUI application support is on the roadmap but not there yet (2021)
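To see the first two pitfalls for yourself, this standard-library sketch reports the owner and permission bits of files as seen from inside the container. Running it over a bind-mounted project folder and comparing with files created inside the container makes a mismatch visible. Both helper names are hypothetical, and `os.getuid` is POSIX-only, so run it in the Linux container rather than on Windows.

```python
import os
import stat


def ownership(path):
    """Return (uid, gid, mode string) of `path` as seen by this process."""
    st = os.stat(path)
    return st.st_uid, st.st_gid, stat.filemode(st.st_mode)


def foreign_owned(paths):
    """List paths not owned by the current user, e.g. root-owned bind mounts."""
    me = os.getuid()  # POSIX only
    return [str(p) for p in paths if ownership(p)[0] != me]
```

For example, `foreign_owned(Path(".").iterdir())` in the project root (with `from pathlib import Path`) would list the entries that appear owned by another user, such as root, when the folder is bind-mounted.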