We are Codebeez. We specialise in everything Python and help our clients build custom scalable, robust and maintainable solutions.

Whereas modern applications can exist as a set of connected docker containers and be reproducible, I am old-fashioned in the sense that I like having my own (customized) laptop for development.

However, the process of installing packages, cloning and installing git repos is long and tiresome, and I quickly forget the steps I took to get my environment working the way I want it to work. Having my local system as infrastructure-as-code, written down in an ansible playbook, is a very meticulous way of note-taking, ensuring my laptop setup is as reproducible as a docker image.

Furthermore, I am a big fan of using the mouse as little as possible. I do most of my work in, or close to, the terminal, and avoid anything where I need to point and click. While this is a great way to minimize friction during long code-stamping sessions, a box optimized for keyboard-heavy development does not come for free. The price to pay is a lot of extra time spent on the setup: many components need to be made to play nicely together before I can code distraction-free.

So now I want to touch upon how I set up this development environment, but also what is in it. Specifically, this post covers the following topics:

- The ansible playbook I use to prep my environment.

- Why I prefer working on the terminal and the tools I use to accomplish it.

- The tools I use specifically for python development.

Ansible

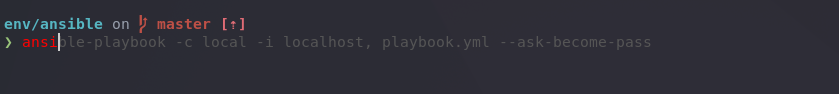

Ansible is an obvious automation choice because it's been around for a long time, has excellent community support, and can run on almost any type of guest machine. In this case, I wanted to make a playbook that I could run on my new (and any subsequent) laptops, as well as on a VM for the purposes of prototyping.

I designed my playbook assuming I'd be using Ubuntu 22.04 with the default Gnome, but with vagrant it is easy to test the setup on other versions.

The tasks that I ended up automating are:

- installing apt packages

- grabbing desired packages from the web (e.g. github) and installing them

- setting the user shell

- setting the services to run at startup

- installing python development tools

- replacing the snap version of firefox with the .deb package

- installing snap packages

- applying my configurations by stowing dotfiles

- setting keybinds, keyboards and configurations through dconf

The ansible playbook I am using can be seen here

I also like to use Vagrant for prototyping my Ansible playbook.

Vagrant lets me boot up a VM through the command line which I can ssh into, which fits in well with my terminal-based approach.

There is also the vagrant provision command which, assuming my Vagrantfile was set up correctly, reruns the ansible playbook which contains my entire setup.

An argument could be made for using a Docker image and running the playbook on the container instead. But I find a Vagrant VM more closely matches what the ultimate target system is going to look like, namely my local machine. Vagrant also has better ansible provisioning integration.

Terminal-based development

It's said that learning to forego the mouse will improve developer efficiency. The theory is that moving the hand from the home row to the mouse takes too long, so if you're able to replace point-and-click interaction with efficient keyboard shortcuts, you become more efficient thanks to the minuscule time gains between writing a line of code and navigating to the next line.

I don't know if that's true. And if it's true, it'll take a while for these tiny gains to compensate for the time needed to retrain yourself to get used to this way of navigation. My personal attraction to keyboard-first development is far less measurable, and has to do with enjoyment and the ability to focus. Reaching for the mouse is, for me, a micro-distraction that breaks my concentration ever so slightly. But I have over time learned so many commands and keybinds that working with only the keyboard has become second nature. Also, I just enjoy it, because it facilitates reaching and staying in a flow state, so I can concentrate better and work for longer.

The next sections are about the tools I use to aid me in this workflow.

kitty terminal

Getting the terminal itself prepped is the first step.

Kitty is a highly customizable terminal which is designed to be operated using only the keyboard. Pretty much every keybinding is customizable; configuration is kept as plaintext so it's easily kept with the rest of my dotfiles. This philosophy extends to customization, so that color schemes and font choices can also be specified in a single plaintext file. There is of course support for tabs as well as splits. The window splitting of kitty for me is feature-complete enough that I never felt the need to get a terminal-multiplexer on top of it, but YMMV.

One difference between kitty and the 'default' terminal is that kitty does not have a scrollbar.

This can be an inconvenience when reading far back into a very long terminal ticker.

The kitty way to do this is to use a scrollback pager (for me it's vim), which can be engaged using the show_scrollback instruction (ctrl+shift+h for me).

fish shell

The fish shell is one of the options for those who've used bash for a while and need some extra convenience features. It also comes with its own scripting language, which would require some getting used to. But to be honest whenever I need to do substantial scripting I just use python.

I must admit, the main killer feature which made me switch was its autosuggestions.

This feature is little more than an automatic reverse-i-search (aka CTRL+R in bash), but having it pop up automatically is a massive improvement.

fish autosuggestions get it right a lot of the time, especially because they are directory-aware, and they often help when I've already forgotten how a specific CLI was supposed to be called.

Autocompletion in fish is also a lot more intelligent, being able to complete files from any filename substring match (not just matching from the start of the file name). In git repositories it can also complete from substrings that match files in any subdirectory of the current repo.
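To make the difference concrete, here is a minimal Python sketch contrasting start-of-name matching with substring matching. It only illustrates the idea; it is not how fish implements completion, and the function names and file list are made up for the example.

```python
def prefix_matches(candidates, typed):
    """Conventional completion: keep names that start with the typed text."""
    return [name for name in candidates if name.startswith(typed)]


def substring_matches(candidates, typed):
    """Substring completion: keep names that contain the typed text anywhere."""
    return [name for name in candidates if typed in name]


# Hypothetical directory listing, used only for illustration.
files = ["test_models.py", "models.py", "README.md", "conftest.py"]

print(prefix_matches(files, "mod"))     # only names starting with "mod"
print(substring_matches(files, "mod"))  # also names with "mod" in the middle
```

With substring matching, typing a memorable fragment of a long filename is enough, which is where most of the convenience comes from.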

fish isn't POSIX-compatible. As I've said, it has its own scripting language, which does some things differently from bash.

The upshot of this is that shell scripts plucked from the internet will not work when sourced.

If this annoys you, you can either use zsh (supposedly not POSIX-compatible either, but it works most of the time) or get in the habit of prefacing commands with bash -c (which will run a single command using bash).

command line tools

Over the last few years, there's also been a surge to replace tried-and-true command line executables with more modern, convenient alternatives. Often written in rust, these tools build on ubiquitous shell programs to make them easier to use. Some of these which I really enjoy using are:

- fzf. In pretty much any modern web app fuzzy searching has replaced conventional searching, but terminal behavior still relies on start-of-string matching for specifying files. fzf brings fuzzy search behavior into the terminal as an executable. Once I got used to calling it as part of a command using pipes and subshells, it greatly improved my ability to quickly specify files multiple subdirectories deep. It also integrates with Vim via plugins.

- zoxide. This tool is marketed as a cd replacement, but that does not appropriately sum up its functionality (for one, I still use cd alongside it). zoxide, or the z command, is an alternate way of jumping directories. Instead of using the current directory and its related directory tree, however, z makes use of your past browsing based on a frecency (frequency + recency) algorithm. It matches directories you've previously visited to a search string. If there are multiple results, it picks the one which you've used most recently or need very often. This is remarkably efficient. z gets it right most of the time for me, but when you install it, it does need some "training data" to get going.

- fd. I am never able to use find correctly the first time. I always have to look up the arguments again that let me perform my most common use case. Not so with fdfind. Using fdfind {search string} does a recursive search from the current directory for filenames matching the desired pattern, which is what I want most of the time. Results also pipe nicely into fzf. This tool is available through apt on Ubuntu 22.04. Aliasing it to fd is recommended.

- exa. Essentially just a better ls. It adds basic QoL features such as color-coding and icons to results, as well as optional git status in the long-form results. While it seems like a minimal benefit, the fact that ls is my most-used command makes optimizing its results worthwhile. There's also a fisher plugin to automatically create a series of aliases to replace ls with exa, but you can also set up the aliases yourself.
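The frecency idea behind zoxide can be sketched in a few lines of Python. This is a toy model under stated assumptions, not zoxide's actual implementation: the time buckets, the weights, the history format and the function names are all illustrative.

```python
import time


def frecency(visits, last_visit, now):
    """Score a directory: more visits raise it, a stale last visit lowers it."""
    age = now - last_visit
    if age < 3600:          # visited within the last hour
        weight = 4.0
    elif age < 86400:       # within the last day
        weight = 2.0
    elif age < 604800:      # within the last week
        weight = 0.5
    else:                   # older than a week
        weight = 0.25
    return visits * weight


def best_match(history, query, now=None):
    """Return the highest-scoring visited directory whose path contains query."""
    now = time.time() if now is None else now
    candidates = [
        (frecency(visits, last_visit, now), path)
        for path, (visits, last_visit) in history.items()
        if query in path
    ]
    return max(candidates)[1] if candidates else None


# Hypothetical history: path -> (number of visits, timestamp of last visit).
now = 1_000_000.0
history = {
    "/home/me/work/api": (40, now - 10 * 86400),  # frequent, but stale
    "/home/me/play/api": (15, now - 600),         # fewer visits, very recent
    "/home/me/notes": (5, now - 60),
}
print(best_match(history, "api", now))
```

In this example the recently used directory wins over the one with more total visits, which is the trade-off the frequency + recency score is meant to capture. It also shows why a fresh install needs some "training data": with an empty history there is nothing to match.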

VIM

Vim is a text editor that is available on pretty much every GNU/Linux system. This is one of its strengths. If you're used to Vim, working on a malfunctioning box somewhere in the cloud can become a lot easier. Built on software (vi, ex) that predates the mouse, it is an ideal tool for keyboard-heavy users. However, it is not an IDE, and getting it to the level of a decent code editor requires a lot of configuration.

Getting Vim to work as a code editor would be an entire article (or article series) by itself. If you are interested in getting started with this, I'd recommend using neovim, which has saner defaults and a good plugin ecosystem for new users.

First timers can consider one of the following options:

- vscode-neovim. If you are currently using vscode, this is the way. Many editors provide "vim keybindings", usually referring to a subset of vim features such as modal editing with Normal mode being set up with commonly used Vim keybinds. This is usually insufficient. This extension, on the other hand, is actual neovim embedded (and integrated) in VSCode. This is a good way to get familiar with Vim keybindings.

- kickstart.nvim. This is a pre-baked configuration you can build on top of. It is geared towards a neovim code editor setup.

- LazyVim, a neovim distribution which bundles an entire setup for users that want something that 'just works'.

No matter what you pick, you will not get around having to do the work of adapting to a new way of editing code/text. Vim is old. It builds upon software which predates the mouse. It will do everything differently from what you are used to. While you can configure it to adapt to your way of working, it is better to change your way of working (and your way of thinking) to adapt to it, which is consistent and elegant, if alien and unfamiliar. This post goes into the Vim way of thinking. If you are just starting out, it will not be very useful to you. However, it is a good post to bookmark now and come back to later.

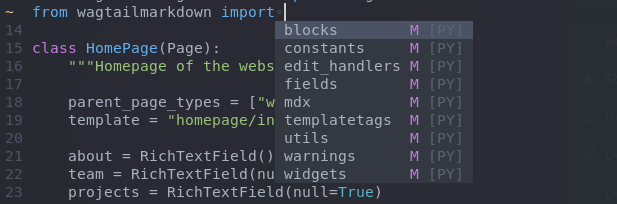

It should be noted that being a Vim user does not necessarily mean having to give up on IntelliSense code completion. The same extensions for completing (python) code available in VSCode can be ported to Vim. The language server protocol (LSP) was developed by Microsoft and VSCode is the most-used editor to implement it. But other editors have LSP support as well. For Vim, this is done through the plugin coc.nvim. Neovim, a refactor of Vim, has native LSP support, but still requires one or more plugins in order to configure providers for the desired programming languages. A downside for python is that pylance, the VSCode LSP implementation, is closed-source, the closest thing being the open-source pyright on which pylance is built.

python development tools

UPDATE: Much of this information is still applicable, but is superseded by new tools. I now recommend using uv as a replacement for all of the below tools, as discussed here

There are obvious tools which every python developer needs, such as linters and code formatters. As such, I don't see the need to cover them here. The tools that I've personally used and that have made my day-to-day development more enjoyable aren't as ubiquitous. When setting up my development environment, I made sure these tools are automatically included.

pyenv

pyenv is a lightweight way of providing different python versions for different projects. This can be useful if, like me, you anticipate switching between different projects frequently, and the python versions for those projects might be different.

Different python executables provided by pyenv are easy to integrate with virtual environments.

For example, pyenv provides pyenv-virtualenv,

which manages virtual environments, each tied to a particular python version.

But you can also connect pyenv python versions to poetry projects

which demand a very specific python version.

This is done through the use of a .python-version file, setup by using pyenv local.

A way to do this is provided below.

Pyenv is remarkably seamless to use after setup, and relieves developers from remembering where a specific python executable or virtual environment lives.

pipx

pipx provides a single place to keep python executables to be run from the command line.

I usually install python packages for one of two reasons: Software I'm working on has it as a dependency, or I need some sort of python command-line tool (e.g. a linter).

When flipping through virtual environments, I want those CLI tools to come with me.

Also, I generally want my venv to have only the dependencies specific to the code I'm working on (In case I ever need to pip freeze for something).

pipx is the ideal tool for this. It maintains CLI tools in virtual environments it manages itself, and then makes them available from the command line 'globally'.

Its usage is straightforward, being similar to pip. pipx install {package} gets the job done.

However, every install creates a new virtual environment. You might often want multiple packages to live in a single pipx venv.

To this end, pipx inject is the second important command you should know to successfully use this tool.

poetry

poetry is a python packaging and dependency management tool.

It makes it easy to ensure consistent dependency versions for different users running the same project,

but it does require the project to be configured with poetry in mind.

That is, it must be bundled with the pyproject.toml and poetry.lock files.

Poetry installation and use aligns well with the two utilities I've mentioned above.

That is, you can use pipx to install poetry, and pyenv to link poetry projects to versioned python executables.

To install the latest version of poetry (the one available on the apt repository will likely be out of date), run the following:

pipx install poetry

poetry -V # confirm the shell can find it and you have the latest version

An existing python project might have very specific requirements on a python version.

These will be specified in the pyproject.toml file.

We can use pyenv to ensure these requirements are satisfied.

This can be seen in the example below, which uses version 3.10.9.

pyenv install 3.10.9 # do this only once

cd /path/to/python/project/containing/pyproject.toml

pyenv local 3.10.9 # this generates a .python-version file

poetry env use python

poetry shell # ensures the poetry virtual environment is used by the shell

This works because the python executable is provided by pyenv at the time that poetry env use python is run.

Poetry uses this python executable when creating its virtual environment, and checks it against the python version required by pyproject.toml.

The poetry shell command will have to be rerun once per shell instance; everything else needs to be run only once per project.

Of course, the pyenv install command only has to be used once per python version.
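If you want to double-check that the interpreter you ended up with matches the pin, a small Python sketch like the one below can help. It assumes the .python-version file holds a plain numeric version such as 3.10.9 on its first line; the helper names pinned_version and interpreter_matches are made up for this example and are not part of pyenv or poetry.

```python
import sys
import tempfile
from pathlib import Path


def pinned_version(project_dir):
    """Return the version string from .python-version, or None if absent."""
    version_file = Path(project_dir) / ".python-version"
    if not version_file.is_file():
        return None
    entries = version_file.read_text().split()
    return entries[0] if entries else None


def interpreter_matches(pinned, version_info=sys.version_info):
    """True if the interpreter version starts with the pinned version parts."""
    wanted = tuple(int(part) for part in pinned.split("."))
    return tuple(version_info[: len(wanted)]) == wanted


# Demo in a throwaway directory, so no real project is touched.
with tempfile.TemporaryDirectory() as project:
    Path(project, ".python-version").write_text("3.10.9\n")
    pinned = pinned_version(project)
    print(pinned)
    # Compare against a fixed version tuple rather than the running interpreter.
    print(interpreter_matches(pinned, (3, 10, 9, "final", 0)))
```

Inside a real project you would call interpreter_matches(pinned_version(".")) from the poetry-managed environment, so the comparison runs against the interpreter that environment actually uses.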

pyflyby

In interactive notebooks, pyflyby can help if you always forget your import statements.

Having to put them in can be time-consuming,

especially if you have to put them in for every cell because you don't want to call your entire notebook in order each time.

pyflyby 'guesses' which library you are trying to call and imports them automatically.

It can also be configured to provide and recognize import aliases, e.g. import numpy as np

pyflyby also exports its functionality to the command line.

Using ipython instead of the default interactive interpreter is a good idea to begin with, as the latter lacks most features you might take for granted.

pyflyby really takes it to the next level by providing auto-imports. The libraries it imports can be configured as needed,

but by default it will provide at least the python standard libraries and some popular third-party packages.

The end result of this is that I'm able to seamlessly build a python REPL into my command line, my favorite example being py 'uuid.uuid4()', which is a quick way of producing a randomly generated UUID.

You can also consider using py 'random.random()' if you, like me, always forget how /dev/random is supposed to work.
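For comparison, this is roughly what those two one-liners amount to in plain python once the imports that pyflyby would otherwise add are written out by hand. Note that the standard-library function is spelled uuid.uuid4 (lowercase); the variable names are only for illustration.

```python
import random
import uuid

random_id = uuid.uuid4()        # a randomly generated (version 4) UUID
random_float = random.random()  # a float in the half-open interval [0.0, 1.0)

print(random_id)
print(random_float)
```

The values differ on every run, which is the point: both calls are quick sources of throwaway random data.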

Conclusion

There are other things I use on a regular basis that I did not mention here. But the core message is simply this: a development environment has a lot of parts, but if you code for a living, getting those parts just right pays dividends.

What I discussed here are some of those parts that I discovered, often by reading other people's blogs, and brought into my workflow. The development work I did has been smoother and more enjoyable as a result. So now I'm hoping to pass that knowledge forward. If you've read through all of this, thank you, and I hope you've found at least one thing you're considering incorporating.